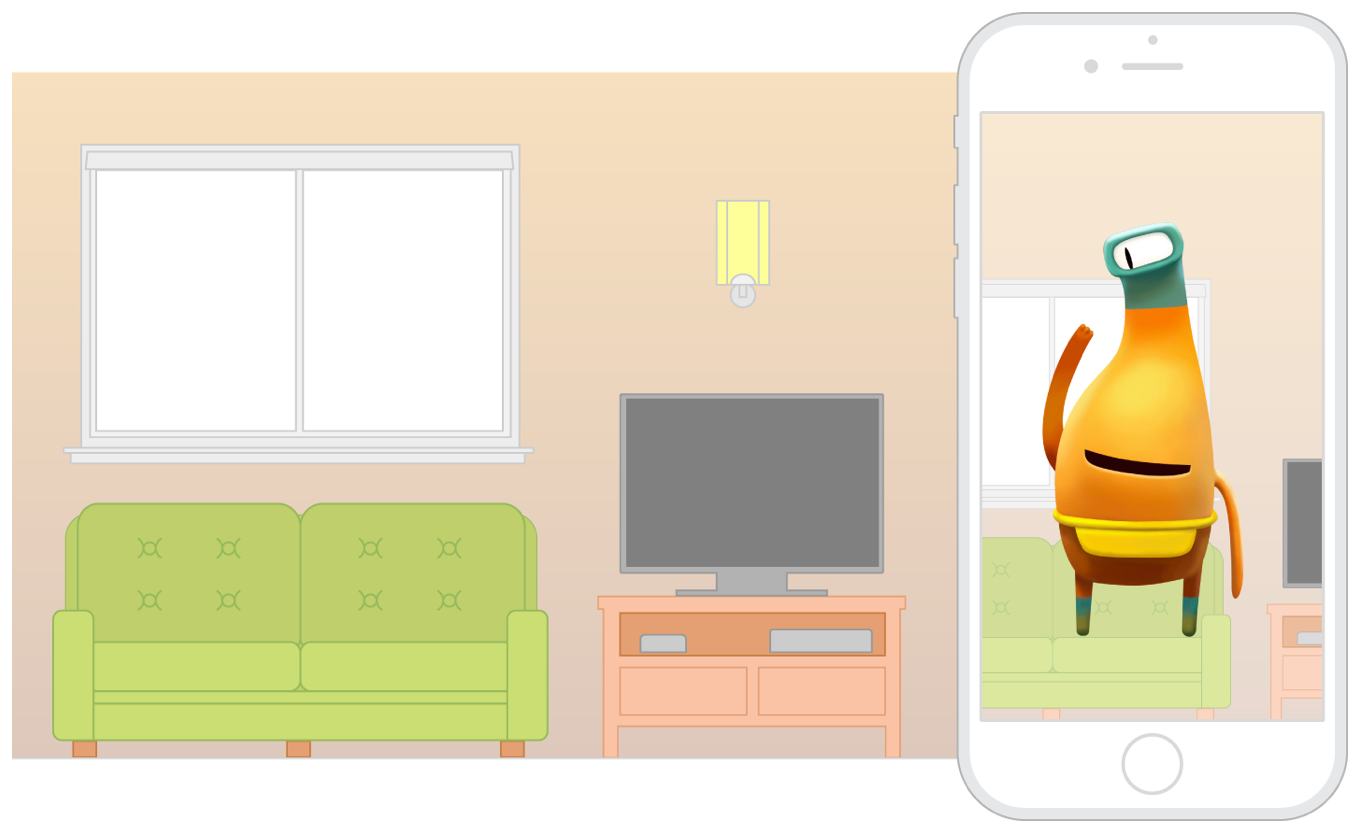

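ARKit uses visual-inertial odometry (VIO). It combines data from the device's motion-sensing hardware with computer-vision analysis of the camera image to track the device's position and orientation.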

About Augmented Reality and ARKit | Apple Developer Documentation

Discover supporting concepts, features, and best practices for building great AR experiences.

Source: developer.apple.com/documentation/arkit/about_augmented_reality_and_arkit